The complete code, including deployment via CDK and a GitHub Action, can be found on GitHub.

I recently stood up a couple of super simple functions in AWS Lambda to collect email addresses on landing pages for marketing websites. They were written in F# using .NET 5 and talk to DynamoDB, and while they worked fine the cold start was pretty poor. As they do very little I’d originally provisioned them with 128 MB of memory (Lambda allocates CPU in proportion to memory), but I was seeing cold start times of more than 3 seconds. When I pushed up the memory allocated to the Lambdas, the cold start time fell to around 1 second.

To run on .NET 5 they were using Lambda’s container image support (it’s just such a convenient way of packaging and deploying code), but that carries a cold start penalty. I moved them to .NET Core 3.1 and ran them without the container, which shaved a small amount of time off, but they were still laggards. I would surmise that .NET Core needs a reasonable (in serverless terms!) amount of horsepower to launch its runtime, certainly a disproportionate amount compared to the simple code I wanted to run. This is fairly well known, and Mikhail Shilkov has some excellent posts on the cold start performance of both AWS Lambda and Azure Functions.

At this point things were OK, but I really wanted to see a cold start response under 0.5 seconds with only a small amount of RAM and CPU allocated. It seemed something of an affront to have to allocate 2 GB of RAM to collect an email address. I’m running at a low level of load, but the amount of resource I needed to assign would definitely hit me in the pocket on a busy site.

I still wanted to use F# though; I wanted, so to speak, to have my cake and eat it. My recent solutions are all end-to-end F#, and it’s nice not to pay the cognitive tax of switching modes into JavaScript (likely TypeScript in my case) and back. I want the expressiveness and robustness of F# without the startup penalty of the .NET runtime.

Fable to the rescue

The solution I came up with was to use the Fable compiler to generate JavaScript Lambdas from my F# code. If you’ve not come across Fable before, it’s a fantastic tool for transpiling F# into JavaScript. It’s most commonly used with React to create single page applications, which is primarily how I use it, but there is nothing intrinsically React-y about it and you can use it to work with Node or any other part of the JavaScript ecosystem.

Interacting with Lambda

One of the things you need to get your head round with Fable is that you are not running in .NET. While most of the core .NET libraries are available to you (thanks to the hard work of the Fable team), when you interact with dependencies you are really working with JavaScript packages. That meant I couldn’t just use the AWS Lambda NuGet packages and had to do a little work to create a Lambda-compatible function. Specifically, as my functions are triggered by API Gateway events, I needed to be able to access the request data and provide a response. These are ultimately JavaScript objects, but to work with them easily and safely from F# we need a few types that describe them:

```fsharp
type ProxyRequest =
  { resource: string
    path: string
    httpMethod: string
    headers: obj
    multiValueHeaders: obj
    queryStringParameters: obj
    multiValueQueryStringParameters: obj
    pathParameters: obj
    stageVariables: obj
    requestContext: ProxyRequestContext
    body: string
    isBase64Encoded: bool }
```

This references ProxyRequestContext, and there are a handful of other types involved; they can be found in the AWSLambda.Types module. Query string parameters, headers, etc. arrive as plain JavaScript objects whose shape we can’t predetermine, so to provide safe access to them there are a number of utility functions, for example:

```fsharp
open Fable.Core.JsInterop // provides the dynamic ? operator

let private safeGetFromObj (objDictionary: obj) (propertyName: string) =
  if objDictionary = null then
    None
  else
    // Dynamic property lookup on the underlying JavaScript object
    let possibleValue: obj = objDictionary?(propertyName)
    if possibleValue = null then None else possibleValue.ToString() |> Some

let getQueryParameter request = safeGetFromObj request.queryStringParameters
```

(Thanks to help from Zaid Ajaj, I’ve since been able to do this without the helper methods, getting rid of the obj types and exposing a map instead. The code is being updated shortly, but I’ll leave this blog post as is and write a second article on how you do this.)
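To make the null handling concrete, here is a hand-written JavaScript equivalent of those helpers (not Fable’s generated code) run against two trimmed-down events. The one runtime detail worth knowing is that with the REST API proxy integration, API Gateway sends queryStringParameters as null, not an empty object, when the request has no query string. The sample events are illustrative and only include the fields used here; returning undefined for “no value” mirrors how Fable erases F# options, compiling None to undefined.

```javascript
// Hand-written JavaScript equivalent of safeGetFromObj (not Fable output).
// "No value" is undefined, matching how Fable represents F# None.
function safeGetFromObj(objDictionary, propertyName) {
  // queryStringParameters (and headers) can be null, so guard the dictionary itself
  if (objDictionary == null) return undefined;
  const possibleValue = objDictionary[propertyName];
  // == null catches both null and undefined (a missing key)
  return possibleValue == null ? undefined : String(possibleValue);
}

// Curried like the F# version, which compiles to getQueryParameter(event)("message")
const getQueryParameter = (request) => (name) =>
  safeGetFromObj(request.queryStringParameters, name);

// Trimmed REST API proxy events; only the fields used here, values are illustrative.
const withMessage = {
  httpMethod: "GET",
  path: "/",
  queryStringParameters: { message: "hello" },
};
const withoutQueryString = {
  httpMethod: "GET",
  path: "/",
  queryStringParameters: null, // what API Gateway sends when there is no query string
};

console.log(getQueryParameter(withMessage)("message")); // hello
console.log(getQueryParameter(withoutQueryString)("message")); // undefined
```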

Although I’ve not used it here, I also created a type for the Lambda context, as it’s so frequently used:

```fsharp
type LambdaContext =
  { awsRequestId: string
    clientContext: ClientContext
    functionName: string
    functionVersion: string
    identity: CognitoIdentity
    invokedFunctionArn: string
    logGroupName: string
    logStreamName: string
    memoryLimitInMB: int
    remainingTime: int }
```

Again, related types can be found in the module.
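One wrinkle worth knowing if you do start using the context: in the Node.js runtime the remaining execution time is exposed through a method, context.getRemainingTimeInMillis(), rather than a property, so a record field such as remainingTime won’t be populated by the runtime. The sketch below is a hypothetical stand-in context for calling a handler locally; makeFakeContext and all of its values are made up for illustration, and only the property and method names follow the runtime’s context object.

```javascript
// Hypothetical helper: a stand-in for the Lambda context when calling a handler locally.
// Property names follow the Node.js runtime's context object; the values are made up.
function makeFakeContext(timeoutMs, functionName = "fableecholambda-local") {
  const deadline = Date.now() + timeoutMs;
  return {
    awsRequestId: "local-request-id",
    functionName,
    functionVersion: "$LATEST",
    logGroupName: `/aws/lambda/${functionName}`,
    logStreamName: "local",
    // In the real runtime this is a method, not a remainingTime property
    getRemainingTimeInMillis: () => Math.max(0, deadline - Date.now()),
  };
}

const context = makeFakeContext(3000);
console.log(context.functionName, context.getRemainingTimeInMillis() <= 3000); // fableecholambda-local true
console.log(context.remainingTime); // undefined
```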

That provides the types for the input to our Lambda, but how do we send back the response? When using API Gateway there is, again, an object format for this, which I’ve mapped onto an F# type:

```fsharp
open Fable.Core.JsInterop // provides createObj

type ProxyResponse =
  { statusCode: int
    headers: obj
    multiValueHeaders: obj
    body: string
    isBase64Encoded: bool }

  // Base response that the helpers copy and override; note it defaults to a 500 status
  static member Empty =
    { statusCode = 500
      headers = createObj []
      multiValueHeaders = createObj []
      body = ""
      isBase64Encoded = false }
```

I’ve also created a few utility functions to make it easy to form up common responses, for example:

```fsharp
open Fable.Core // provides JS.JSON
open Fable.Core.JsInterop // provides createObj and ==>

type BodyType<'a> =
  | Json of 'a
  | PlainText of string

let ok bodyType =
  let contentType, body =
    match bodyType with
    | Json value -> "application/json", JS.JSON.stringify value
    | PlainText value -> "text/plain", value

  { ProxyResponse.Empty with
      body = body
      headers = createObj [ "Content-Type" ==> contentType ]
      statusCode = 200 }
```

Again, all this code can be found in the AWSLambda.Types module.
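Seen from the JavaScript side, ok just produces a plain object in the shape the API Gateway proxy integration expects back: a numeric statusCode, a headers object and a body that must be a string, which is why the Json case serializes its value. The sketch below is a hand-written JavaScript equivalent rather than Fable’s output, and the `{ kind, value }` encoding of BodyType is an illustrative assumption (Fable represents unions differently).

```javascript
// Hand-written equivalent of ProxyResponse.Empty and ok (not Fable output).
// BodyType is modelled as { kind: "json" | "text", value } purely for illustration.
const emptyResponse = () => ({
  statusCode: 500, // mirrors the default in ProxyResponse.Empty
  headers: {},
  multiValueHeaders: {},
  body: "",
  isBase64Encoded: false,
});

function ok(bodyType) {
  // The proxy integration needs body as a string, so JSON payloads are serialized here
  const [contentType, body] =
    bodyType.kind === "json"
      ? ["application/json", JSON.stringify(bodyType.value)]
      : ["text/plain", bodyType.value];
  return { ...emptyResponse(), statusCode: 200, headers: { "Content-Type": contentType }, body };
}

const json = ok({ kind: "json", value: { echo: "hello" } });
console.log(json.statusCode, json.body); // 200 {"echo":"hello"}
```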

Implementing the Lambda

To demonstrate that this approach works, we’re going to implement a really simple Lambda that echoes back a message provided in a query parameter. After our setup work this is genuinely simple, and can be seen below in the App.fs module:

```fsharp
module App

open AWSLambda.Types
open AWSLambda.Types.APIGateway.Request
open AWSLambda.Types.APIGateway.Response

// Our simple Lambda: it's bound to an API Gateway proxy trigger and receives the proxy request
// as its event. The second parameter, unused here, is the Lambda context (of type LambdaContext).
let echo (event: ProxyRequest, _) = promise {
  lambdaLogger "Entered lambda"
  return
    match getQueryParameter event "message" with
    | Some value -> {| echo = value |} |> Json |> ok
    | None -> "No message" |> PlainText |> ok
}
```

You can do basic testing of Lambdas locally (though the experience is nowhere near as good as with Azure Functions), but I’m not going to cover that here; we’ll move straight on to deploying this and showing that it works. If you are interested in the local debugging experience, Amazon have this covered in their documentation.
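Because Fable compiles the promise { } block to a JavaScript Promise, echo ends up as an ordinary (event, context) handler that returns a Promise, which is one of the handler styles the Node.js runtime supports: it waits for the Promise and uses the resolved value as the response. That also makes a quick local smoke test easy. The echo below is a hand-written stand-in for the compiled function, and smokeTest and its events are hypothetical illustrations.

```javascript
// Hand-written stand-in for the compiled echo handler (not Fable output):
// takes (event, context) and returns a Promise of an API Gateway proxy response.
async function echo(event, _context) {
  const params = event.queryStringParameters;
  const message = params == null ? undefined : params.message;
  return message == null
    ? { statusCode: 200, headers: { "Content-Type": "text/plain" }, body: "No message" }
    : {
        statusCode: 200,
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ echo: message }),
      };
}

// Hypothetical local smoke test: invoke the handler directly and inspect the bodies.
async function smokeTest() {
  const withMessage = await echo({ queryStringParameters: { message: "hi" } }, {});
  const withoutMessage = await echo({ queryStringParameters: null }, {});
  return [withMessage.body, withoutMessage.body];
}

smokeTest().then(([a, b]) => console.log(a, b)); // {"echo":"hi"} No message
```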

Building and deploying

OK. So now we’ve got some F# code that we should be able to compile into JavaScript and deploy into AWS. This is a two-part process: compiling and bundling the code, then creating our AWS infrastructure and pushing our build into it.

From F# to deployable JavaScript

If you’ve downloaded my GitHub repository then before continuing you’ll need to run the following:

```shell
dotnet tool restore
npm install
```

This will install the Fable compiler as a .NET tool and Parcel as a dev dependency. To compile the F# into JavaScript you then need to run the command:

```shell
dotnet fable .\src\AWSLambda.fsproj --outDir "./build/fable"
```

That will create a bunch of JavaScript files in the subfolder build/fable. If you inspect them you’ll see something like this for App.js (our App.fs has been transpiled into App.js):

```javascript
import { PromiseBuilder__Delay_62FBFDE1, PromiseBuilder__Run_212F1D4B } from "./.fable/Fable.Promise.2.1.0/Promise.fs.js";
import { APIGateway_Response_BodyType$1, APIGateway_Response_ok, APIGateway_Request_getQueryParameter, lambdaLogger } from "./AwsLambda.Types.js";
import { promise } from "./.fable/Fable.Promise.2.1.0/PromiseImpl.fs.js";

export function echo(event, _arg1) {
    return PromiseBuilder__Run_212F1D4B(promise, PromiseBuilder__Delay_62FBFDE1(promise, () => {
        let matchValue;
        lambdaLogger("Entered lambda");
        return Promise.resolve((matchValue = APIGateway_Request_getQueryParameter(event)("message"), (matchValue == null) ? APIGateway_Response_ok(new APIGateway_Response_BodyType$1(1, "No message")) : APIGateway_Response_ok(new APIGateway_Response_BodyType$1(0, {
            echo: matchValue,
        }))));
    }));
}
```

It’s not going to win any prizes for readability, but for this simple example you can see the parallels between the F# and the JavaScript (Fable can also produce source maps if you’re using a debugger).

We’ve also got a lot of dependencies, and we’re going to need to do a bit more work to bundle things together before we can run this in Lambda. For this we’ll use Parcel, which takes a convention-based approach to bundling; all we really need to do is point it at the entry point, in this case App.js:

```shell
parcel build ./build/fable/App.js --target=node --bundle-node-modules --no-minify --out-dir ./build/dist
```

That will create a single file called App.js (plus a source map) in the build/dist subfolder. I’m not going to show it: it’s 2,500 lines long because it includes all our dependencies.

With this step done we now have something we can deploy to Lambda.

I’ve wrapped those steps up into an npm script (build) so you can create the required output with a single command:

```shell
npm run build
```

On into the cloud with CDK

If you’re coming to AWS from Azure (which is quite likely if you’ve landed here from my Twitter feed), one of the things you quickly come to realise is that AWS is a little more low-level, more of a set of building blocks, than Azure, which can feel a bit more monolithic.

The downside is that there can be a steep initial learning curve on AWS (you can’t escape IAM and VPC for long) and more “parts” to deploy. The upside is that it can feel more consistent as an overall solution: for example, in one of my projects that had strict security constraints, I was able to use a database on a private subnet (no Internet exposure) with Lambda without having to upgrade to a “premium” offering. But that’s a topic for another time. What do we need to deploy here? The following:

- A Lambda execution role - the permissions that will be assigned to our Lambda

- A Lambda function

- An API Gateway

- An API Gateway trigger for our Lambda

- A route in our API Gateway that links to our trigger

We’re going to do this using the AWS Cloud Development Kit (CDK), which builds on top of CloudFormation (think ARM if you use Azure) to let you specify deployments using a familiar, full-featured language and runtime of your choice. There are many benefits to this: no irritating niche language to learn, no coding in markup, and the ability to leverage an existing ecosystem and tooling. I’m a big fan of this approach, and if you’re on Azure I’d encourage you to take a look at Farmer or Pulumi, the latter of which works with multiple cloud vendors. We’re going to use F# and .NET (Core 3.1) here.

I don’t propose to walk through each part of this but rather just focus on a couple of parts of the CDK as used here. The full code can be found in the infrastructure folder.

CDK works through two main concepts: stacks and constructs. Stacks are collections of constructs that can be deployed to an environment. A construct represents one or more resources in the cloud. A construct can also contain other constructs.

In .NET, stacks inherit from the Stack type, so my stack declaration looks like this:

```fsharp
open Amazon.CDK

type LambdaStack(scope, id, props) as stack =
  inherit Stack(scope, id, props)

  // ...
```

A typical construct within the stack would be our Lambda, which also shows off a feature I really like about CDK:

```fsharp
// Function, FunctionProps, Runtime and Code come from Amazon.CDK.AWS.Lambda
let echoFunction =
  Function(
    stack,
    "fableecholambda",
    FunctionProps(
      Runtime = Runtime.NODEJS_12_X,
      Role = lambdaRole,
      // The bundled output produced by Parcel
      Code = Code.FromAsset("./build/dist"),
      // The echo function exported from App.js
      Handler = "App.echo",
      MemorySize = 256.
    )
  )
```

Each of our constructs is associated with a stack, has a name, and then takes properties. One of the things I’ve come to appreciate about CDK is how easy it is to deploy code and assets: in this case we literally just point CDK at the distribution we created earlier and it will ship the code into Lambda. You can take exactly the same approach with containers: just point CDK at your Dockerfile and it will build the image and deploy it, both to Lambda and to other services such as ECS, and unless you want to there’s no need to bother with container registries at all.
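The Handler value follows the Node.js runtime’s “file.export” convention: "App.echo" means load App.js from the root of the deployed asset (here the contents of ./build/dist) and call its exported echo function, which is why the bundle’s file name and the F# function name line up with it. The sketch below is a simplified illustration of that split, not the runtime’s actual code; parseHandler is a hypothetical name, and it assumes a handler without directory segments and a .js file (the real runtime handles more cases).

```javascript
// Simplified illustration of how a Node.js handler string maps to a file and an export.
// Assumes the "file.export" form with no directory prefix; not the runtime's own code.
function parseHandler(handler) {
  const firstDot = handler.indexOf(".");
  if (firstDot <= 0 || firstDot === handler.length - 1) {
    throw new Error(`Handler "${handler}" is not in the form file.export`);
  }
  return {
    file: handler.slice(0, firstDot) + ".js", // module loaded from the asset root
    exportName: handler.slice(firstDot + 1), // function looked up on its exports
  };
}

console.log(parseHandler("App.echo")); // { file: 'App.js', exportName: 'echo' }
```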

At the end of our CDK deployment we also want the URL for our API Gateway so we can test the trigger. We can do that by defining an output:

```fsharp
do CfnOutput(stack, "apiGatewayUrl", CfnOutputProps(ExportName = "apiGatewayUrl", Value = fableLambdaApi.Url)) |> ignore
```

If you’re integrating these into complex GitHub Actions and CI/CD pipelines, a neat approach is to have them output as JSON and translate them into step outputs; one for another blog post perhaps!
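As a rough sketch of that idea: cdk deploy can write stack outputs to a file with --outputs-file, as a JSON document keyed by stack name and then by output ID (for an output defined directly on the stack, the ID passed to CfnOutput). The snippet below flattens such a document into name=value lines, the format GitHub Actions reads step outputs from when they are appended to the file named by $GITHUB_OUTPUT. The sample document and its URL are illustrative, and outputsToLines is a hypothetical helper; if you deploy several stacks with clashing output names you would want to prefix each name with its stack.

```javascript
// Hypothetical helper: flatten a cdk --outputs-file document
// ({ StackName: { OutputId: value } }) into name=value lines.
function outputsToLines(outputsJson) {
  const outputs = JSON.parse(outputsJson);
  const lines = [];
  for (const stackOutputs of Object.values(outputs)) {
    for (const [name, value] of Object.entries(stackOutputs)) {
      lines.push(`${name}=${value}`);
    }
  }
  return lines;
}

// Illustrative sample only; a real file would contain your stack's actual URL.
const sample = JSON.stringify({
  FableLambdaStack: { apiGatewayUrl: "https://example.execute-api.eu-west-1.amazonaws.com/prod/" },
});
console.log(outputsToLines(sample).join("\n"));
// apiGatewayUrl=https://example.execute-api.eu-west-1.amazonaws.com/prod/
```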

Finally we need an entry point that instantiates our stack. Our app is really just a console app and so our entry point looks like this:

```fsharp
open Amazon.CDK
open Infrastructure

[<EntryPoint>]
let main _ =
  let app = App(null)
  LambdaStack(app, "FableLambdaStack", StackProps()) |> ignore
  app.Synth() |> ignore
  0
```

With all that done, and if you’ve got the AWS CLI installed and credentials configured, you can deploy with a simple command:

```shell
cdk deploy
```

If you’ve not used CDK in a region before, you will be asked to bootstrap the region first (cdk bootstrap): CDK uses AWS resources to do its heavy lifting. I think this is rather neat. You’ll see Lambdas getting created and destroyed to do things, and it’s a testament to the AWS infrastructure that you see it being dogfooded in this way.

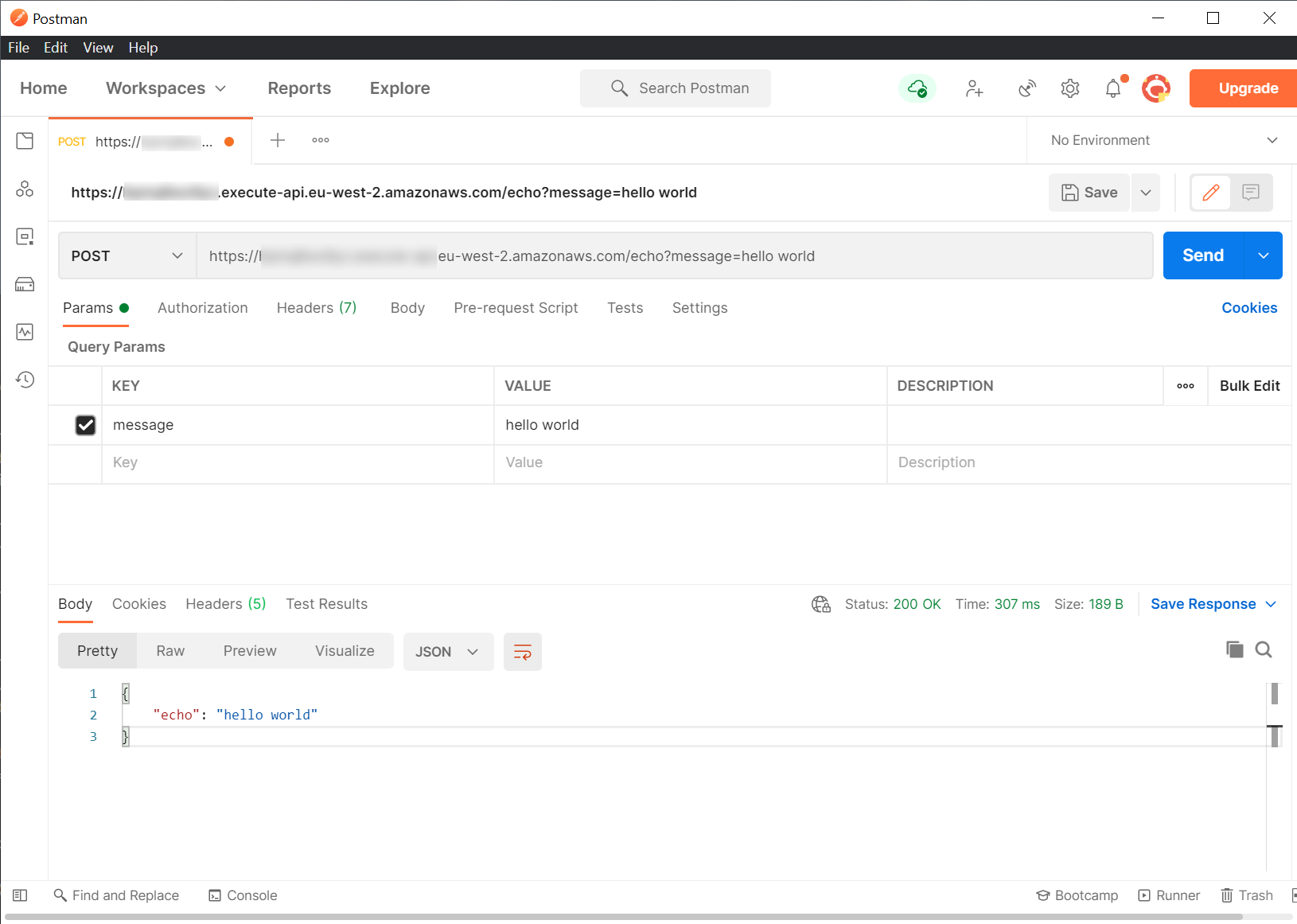

In theory that’s it: if we call our Lambda using the output URL with a message query parameter, it echoes the message back as JSON.

That’s a 128 MB Lambda giving me a 307 ms response including the cold start. Subsequent executions are around 25 ms to 30 ms. The best I could achieve using .NET was around 500 ms with the cold start, and 25 ms to 30 ms for subsequent executions, but I had to deploy a Lambda with 2 GB of memory assigned. Because cost is proportional to the memory allocated and the time run, my .NET Lambda costs 16x as much per millisecond as my JavaScript Lambda created with F# and Fable, and at scale that’s going to make a difference to your cloud bill. Obviously if you’re not worried about cold starts things are very different, and there’s more to performance than this simple function, but if your workload is IO bound… well, if nothing else it’s worth experimenting with for some of your more expensive workloads. My “real” Lambda that talks to DynamoDB is running quite happily within 128 MB, and given how Lambda scales there’s no reason to think that won’t continue as load increases (see my post on this).

Automating deployment with a GitHub Action

With that running I wanted to deploy things automatically using a GitHub Action. I’m not going to cover this at any length here, as it really does just wrap up everything above into a fairly simple Action: I set up my prerequisites, build the output, and deploy it with CDK.

If you want to look at it then you can find it in the repo here.

Conclusions

With a little bit of upfront work, Fable really did let me have my cake and eat it. I’m typically seeing a total end-to-end request time of under 300 ms on a cold start, and I’m able to achieve it with F# as part of an end-to-end F# solution. Time-wise, it only took me a couple of hours to assemble this solution (I was already familiar enough with all the building blocks). I’m likely to build more Lambdas in this fashion, particularly when they directly form part of a customer-facing workflow or call chain, and as I do so I’ll likely expand my AWSLambda.Types module to include other events. I’ll publish those if and when I do.

It’s also worth noting that if I were running at scale, the lower overhead of the JavaScript runtime compared to the .NET runtime would yield significant cost savings. My deployed Lambda requires literally one sixteenth of the resources of the .NET Lambda, and that translates to one sixteenth of the cost to run ($0.0000000021 per 1 ms for my JavaScript Lambda compared to $0.0000000333 per 1 ms for my .NET Lambda).
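The arithmetic behind those figures is simple because Lambda bills compute as allocated memory multiplied by duration. A minimal sketch, assuming the standard x86 rate of $0.0000166667 per GB-second (the us-east-1 price, which reproduces the per-millisecond figures above; check current pricing for your region). It ignores the per-request charge, which is the same regardless of memory size.

```javascript
// Assumed x86 price per GB-second; verify against current Lambda pricing for your region.
const PRICE_PER_GB_SECOND = 0.0000166667;

// Cost of running a function with the given memory size for 1 ms.
function costPerMs(memoryMb) {
  const gb = memoryMb / 1024;
  return (PRICE_PER_GB_SECOND * gb) / 1000;
}

const jsCost = costPerMs(128); // the Fable-generated JavaScript Lambda
const dotnetCost = costPerMs(2048); // the .NET Lambda
console.log(jsCost.toFixed(10), dotnetCost.toFixed(10), Math.round(dotnetCost / jsCost));
// 0.0000000021 0.0000000333 16
```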

Hopefully future versions of .NET will drive startup costs down; at the moment there’s quite a gulf between it and other popular runtimes. It’s fair to say the .NET team has done magnificent work on performance in other areas of the runtime, so I’m optimistic this is mostly a question of priorities for them.

And finally, I’ve found CDK to be a great deployment tool. Not only can I use a familiar language and popular runtime to create my infrastructure, rather than struggling with markup or learning Yet Another Niche Language, but it also covers deployment of assets. Here we deployed JavaScript code, but deploying containers is generally as simple as saying “here’s my Dockerfile”. It’s also pretty self-consistent and reusable, and after my first project I’ve found I can create new things with ease and with a good level of predictability. I really wish Azure would look at this kind of approach: Bicep isn’t even skating to where the puck is, never mind where it’s going to be.